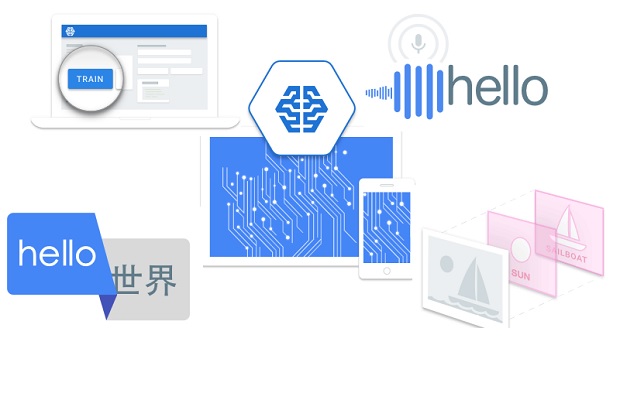

Google has made its Cloud Machine Learning platform available to developers today, giving them access to the platform that powers Google Photos, Translate and the Google Inbox app.

The move means that developers can now use the Cloud Machine Learning platform to build Google’s translation, photo and speech recognition APIs into their own apps.

The platform was launched at NEXT 2016, Google’s Cloud Platform conference, and is now available in limited preview.

“Cloud Machine Learning will take machine learning mainstream, giving data scientists and developers a way to build a new class of intelligent applications,” Fausto Ibarra, Google’s director of product management, wrote in a blog post. “It provides access to the same technologies that power Google Now, Google Photos, and voice recognition in Google Search as easy to use REST APIs.”

Google’s Cloud Machine Learning platform consists of two parts: one that lets developers build machine learning models from their own data, and another that offers developers pre-trained models.

To train these machine learning models, developers can take their data from tools such as Google Cloud Dataflow, Google BigQuery, Google Cloud Dataproc, Google Cloud Storage and Google Cloud Datalab.

“Cloud Machine Learning will take care of everything from data ingestion through to prediction,” the company says. “The result: now any application can take advantage of the same deep learning techniques that power many of Google’s services.”

Google isn’t the first major company to introduce a machine learning platform. It follows in the footsteps of Microsoft Azure and Amazon Web Services, which launched their own platforms in 2014 and 2015 respectively.

Rise of the machines

Google has been merging its machine learning research efforts with search, an indication of how high a priority machine learning has become inside the company. The AI division now has access to the most critical and lucrative part of Alphabet’s business: search advertising.

Google’s decision to replace its head of search, Amit Singhal, with its machine learning chief, John Giannandrea, signals that AI (e.g. Google Now predicting what users want before they search for it) will play a larger part in the future of search.

In his current role as engineering VP, Giannandrea oversees ‘deep neural networks’ of hardware and software that analyse vast amounts of digital data.

These networks have been used in the past to identify objects in photos without human input (e.g. the AI learned what an image of a cat looked like by matching thousands of similar images found across the web). The technology can also recognise commands spoken into a smartphone and respond to internet search queries.

As the technology evolves, machines can perform some complex tasks (and even make some decisions) better and faster than humans, and at a much larger scale.