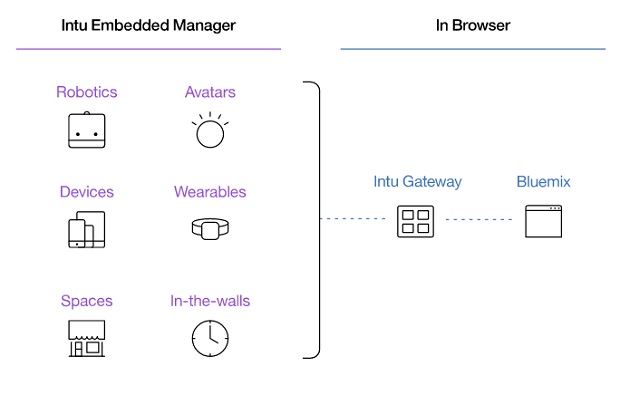

IBM has launched Project Intu, an open platform designed to let developers embed its Watson AI functions into a range of end-user device form factors, offering a next-generation architecture for building “cognitive-enabled experiences”.

Project Intu, in its experimental form, is now accessible via the Watson Developer Cloud and also available on Intu Gateway and GitHub.

Vonage, a cloud communications company for business, has recently collaborated with IBM’s Project Intu to allow developers to embed Watson functions using the Nexmo Voice API.

The partnership will improve cognitive interactions by bringing real-time, contextual insights to business voice communications through the Nexmo Voice API’s WebSocket support. Here is a 90-second video outlining the benefits the Voice API will bring to IBM’s Project Intu and Watson.

Vonage has also updated its voice API platform by adding WebSocket support to the Nexmo Voice API, allowing customers to connect PSTN calls to WebSocket endpoints. This feature will allow AI engines and bots to be conferenced into meetings to speed up the decision-making process. Here is a blog post outlining the new features.
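To make the idea of connecting a PSTN call to a WebSocket endpoint more concrete, here is a minimal sketch in Python of the kind of call-control document (an NCCO) that could tell the Voice API to stream a call's audio to a WebSocket. The helper name `build_websocket_ncco`, the `wss://bot.example.com/audio` URL and the `session` header are hypothetical and used for illustration only; the field names reflect the NCCO `connect` action as commonly documented and should be checked against the current Voice API reference before use.

```python
import json


def build_websocket_ncco(ws_uri, sample_rate=16000, headers=None):
    """Build a call-control document that connects a call to a WebSocket.

    Assumption: the endpoint accepts 16-bit linear PCM audio at 8 kHz or
    16 kHz, expressed as an "audio/l16;rate=..." content type.
    """
    if sample_rate not in (8000, 16000):
        raise ValueError("sample_rate must be 8000 or 16000")
    if not ws_uri.startswith(("ws://", "wss://")):
        raise ValueError("ws_uri must be a ws:// or wss:// URL")

    endpoint = {
        "type": "websocket",
        "uri": ws_uri,
        "content-type": f"audio/l16;rate={sample_rate}",
    }
    # Optional metadata passed to the WebSocket server when the call connects.
    if headers:
        endpoint["headers"] = dict(headers)

    # A single "connect" action that bridges the call to the endpoint.
    return [{"action": "connect", "endpoint": [endpoint]}]


if __name__ == "__main__":
    # Hypothetical bot endpoint and header, for illustration only.
    ncco = build_websocket_ncco(
        "wss://bot.example.com/audio", headers={"session": "demo"}
    )
    print(json.dumps(ncco, indent=2))
```

An application would typically return this JSON from the webhook that answers an incoming call; the service listening on the WebSocket (an AI engine or bot, for example) then receives the call audio and can respond in real time.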

Developers can more simply integrate Watson services, such as Conversation, Language and Visual Recognition, with the capabilities of the “device” so that it can, in essence, act out the interaction with the user. Instead of requiring a developer to program each individual movement of a device or avatar, Project Intu makes it easy to combine movements appropriate for specific tasks, such as assisting a customer in a retail setting or greeting a visitor in a hotel, in a way that is natural for the end user.

Typically, developers must make architectural decisions about how to integrate different cognitive services into an end-user experience – such as what actions the systems will take and what will trigger a device’s particular functionality. Project Intu offers developers a ready-made environment on which to build cognitive experiences running on a wide variety of operating systems – from Raspberry Pi to macOS, Windows to Linux machines, to name a few. As an example, IBM has worked with Nexmo, the Vonage API platform, to demonstrate the ways Intu can be integrated with both Watson and third-party APIs to bring an additional dimension to cognitive interactions via voice-enabled experiences, using the Nexmo Voice API’s support for WebSockets.

The growth of cognitive-enabled applications is sharply accelerating. IDC recently estimated that “by 2018, 75 percent of developer teams will include Cognitive/AI functionality in one or more applications/services.” * This is a dramatic jump from last year’s prediction that 50 percent of developers would leverage cognitive/AI functionality by 2018.

“IBM is taking cognitive technology beyond a physical technology interface like a smartphone or a robot toward an even more natural form of human and machine interaction,” said Rob High, IBM Fellow, VP and CTO, IBM Watson. “Project Intu allows users to build embodied systems that reason, learn and interact with humans to create a presence with the people that use them – these cognitive-enabled avatars and devices could transform industries like retail, elder care, and industrial and social robotics.”

Project Intu is a continuation of IBM’s work in the field of embodied cognition, drawing on advances from IBM Research, as well as the application and use of cognitive and IoT technologies. Making Project Intu available as an experimental offering lets developers try it out and provide feedback, which will serve as the basis for further refinements as it moves toward beta.